I’ve been thinking about the Turing Test this week after reading this Wired article profiling futurist and inventor Ray Kurzweil. The following paragraph describes part of the plot from an upcoming movie featuring Kurzweil:

Ramona [a sentient AI] is on a quest to attain full legal rights as a person. She agrees to take a Turing test, the classic proof of artificial intelligence, but although Ramona does her best to masquerade as human, she falls victim to one of the test's subtle flaws: Humans have limited intelligence. A computer that appears too smart will fail just as definitively as one that seems too dumb. “She loses because she is too clever!” Kurzweil says.

So what does the test actually measure? Here, Kurzweil claims that AI will have trouble with the Turing Test because, almost by definition, AI will be more “clever” than humans. In the next paragraph, however, Kurzweil suggests that the test is really meant to measure “human emotion” rather than intelligence.

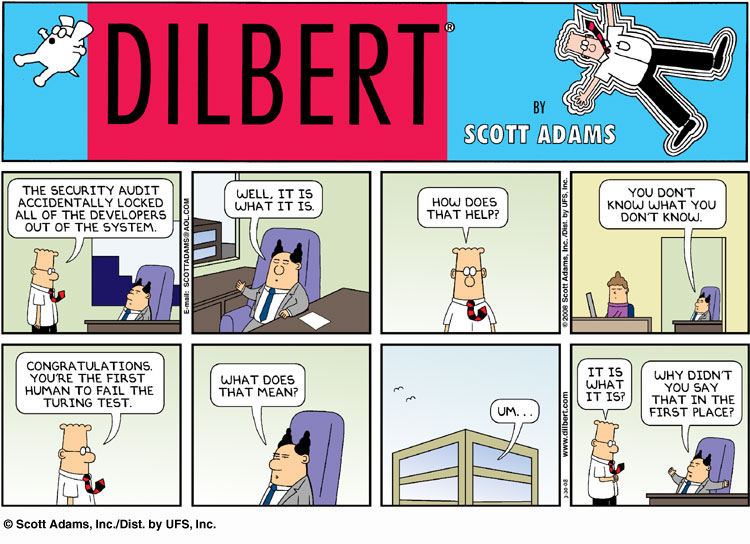

Whether the test is meant to measure intelligence or the presence of human emotion, both standards are problematic because both depend on language. Consider the example of today’s Dilbert:

In the cartoon, Scott Adams suggests that certain patterns of human behavior (in this case, business jargon) would cause a person to fail the test, apparently because such jargon is designed to give the appearance of intelligence rather than to express actual intelligence. This is a great example of how the Turing Test is no more an absolute method of classifying intelligence than an I.Q. test is. Instead, it is a culturally situated artifact that tries to capture a particular conception of intelligence, one accepted at a particular point in history.
